5 AI 3D Breakthroughs in 2025 That Changed Production Forever

Remember when AI-generated 3D models looked like melted Play-Doh? That was just two years ago. Today, I'm watching AI 3D model generators create production-ready assets in seconds, complete with clean topology and PBR textures. The transformation has been nothing short of revolutionary.

2025 marks the year AI 3D finally grew up. No more blob-like meshes or unusable geometry—we're talking about tools that professional studios are integrating into their pipelines. As someone who's tested dozens of these platforms, I can tell you the leap from 2024 to 2025 feels like jumping from dial-up to fiber optic.

Let me walk you through the five breakthroughs that turned AI 3D from a buzzword into a production reality.

The New Reality: Where AI 3D Stands in Late 2025

The numbers tell the story. What used to take hours now happens in minutes, sometimes seconds. Text-to-3D quality has reached a point where generated models can go straight into production pipelines with minimal cleanup.

Here's what changed:

- Generation speed: 10-120 seconds for usable assets

- Topology quality: Clean, animation-ready meshes

- Texture fidelity: Full PBR materials generated automatically

- Integration: Direct export to major 3D software

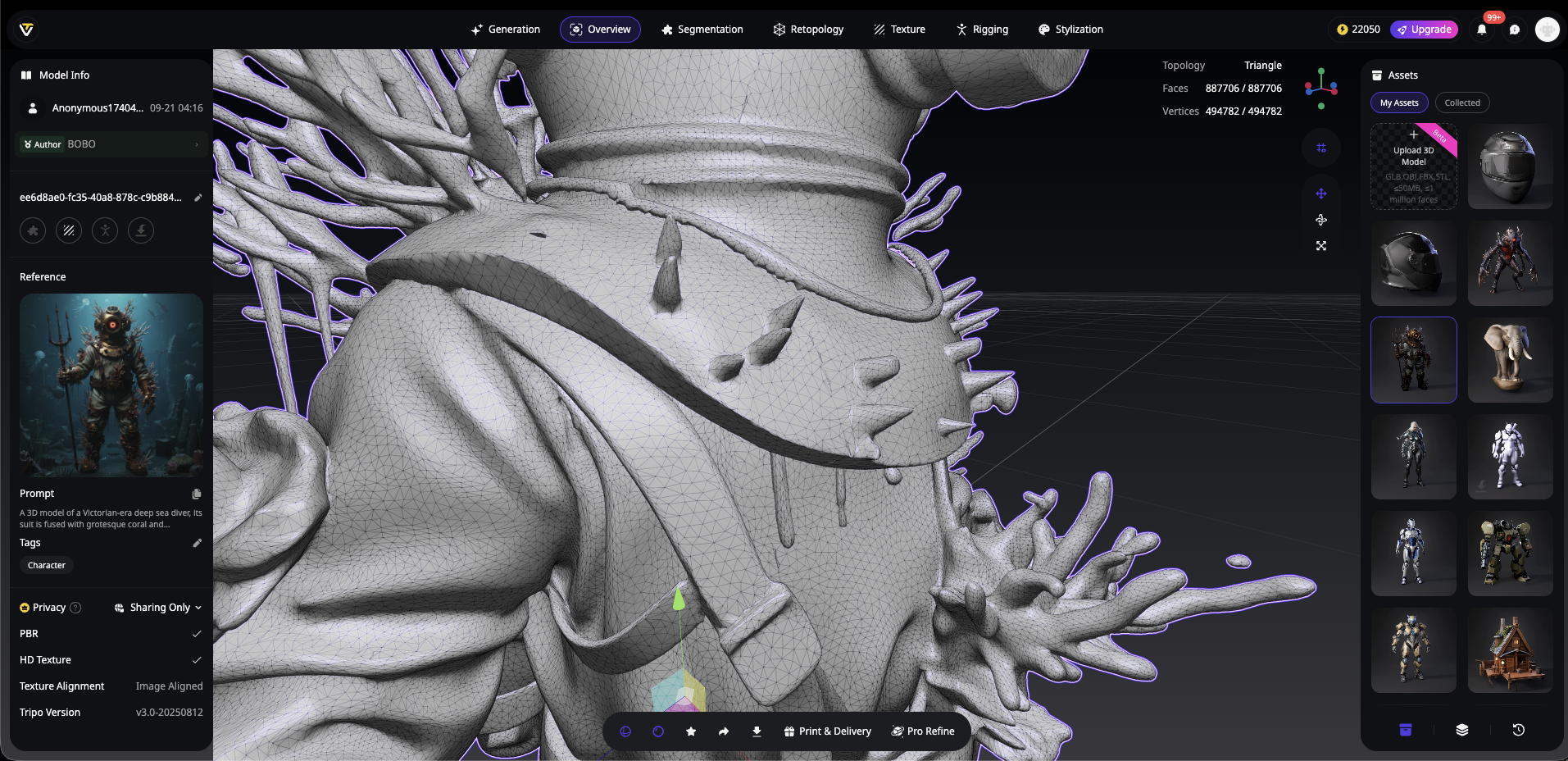

Tripo AI exemplifies this shift perfectly. Their Algorithm 3.0 beta, released in August 2025, generates models in just 10 seconds. But speed isn't the headline—it's the quality. Users report dramatically cleaner topology, sharper geometry, and intelligent part segmentation that actually makes sense.

The old workflow? Generate, retopologize, UV unwrap, texture, rig. The new workflow? Generate and refine. That's it.

The Top 5 AI 3D Breakthroughs of 2025

For a clear view of how far AI 3D models have progressed in quality, topology, texture fidelity, speed, and production readiness, platforms like Top3D.ai provide valuable insights and comparisons of the latest tools.

AI Texturing & Material Generation (PBR)

Remember spending hours painting textures in Substance Painter? AI PBR texturing systems now generate complete material sets from simple text prompts or reference images.

What changed: AI doesn't just slap colors onto models anymore. These systems understand material properties—roughness, metallic values, normal maps, ambient occlusion. They generate full PBR texture sets that respond correctly to lighting.
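To make those material properties concrete, here's a minimal sketch of what a full PBR texture set might look like as a data structure. The class name, field names, and file names are hypothetical and only for illustration; this is not any tool's actual export format.

```python
from dataclasses import dataclass, fields
from typing import List, Optional


@dataclass
class PBRTextureSet:
    """Hypothetical container for the maps in a metallic-roughness PBR set."""
    base_color: Optional[str] = None         # surface color without lighting
    roughness: Optional[str] = None          # how rough or glossy the surface is
    metallic: Optional[str] = None           # metal vs. non-metal mask
    normal: Optional[str] = None             # fine surface detail without extra geometry
    ambient_occlusion: Optional[str] = None  # baked soft shadowing in crevices

    def missing_maps(self) -> List[str]:
        """Return the names of maps that have not been provided."""
        return [f.name for f in fields(self) if getattr(self, f.name) is None]

    def is_complete(self) -> bool:
        """True when every map in the set has a file assigned."""
        return not self.missing_maps()


# Illustrative file names only.
bronze = PBRTextureSet(
    base_color="bronze_basecolor.png",
    roughness="bronze_roughness.png",
    metallic="bronze_metallic.png",
    normal="bronze_normal.png",
)
print(bronze.missing_maps())  # → ['ambient_occlusion']
```

A check like this is a cheap way to confirm that a generated material really is a full set before it goes into a scene.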

Why it matters: This breakthrough collapsed a multi-software workflow into a single step. Artists can iterate through dozens of material variations in the time it used to take to create one.

Tripo AI's Magic Brush showcases this perfectly. Upload any model, describe the texture you want, and watch as the AI applies physically accurate materials across the entire mesh. I've seen it turn a bland character model into a weathered bronze statue or sleek sci-fi armor in seconds.

Real-world impact:

- Texture creation time: Reduced by 90%

- Quality consistency: Maintains style across entire projects

- Iteration speed: Test 50 variations in 5 minutes

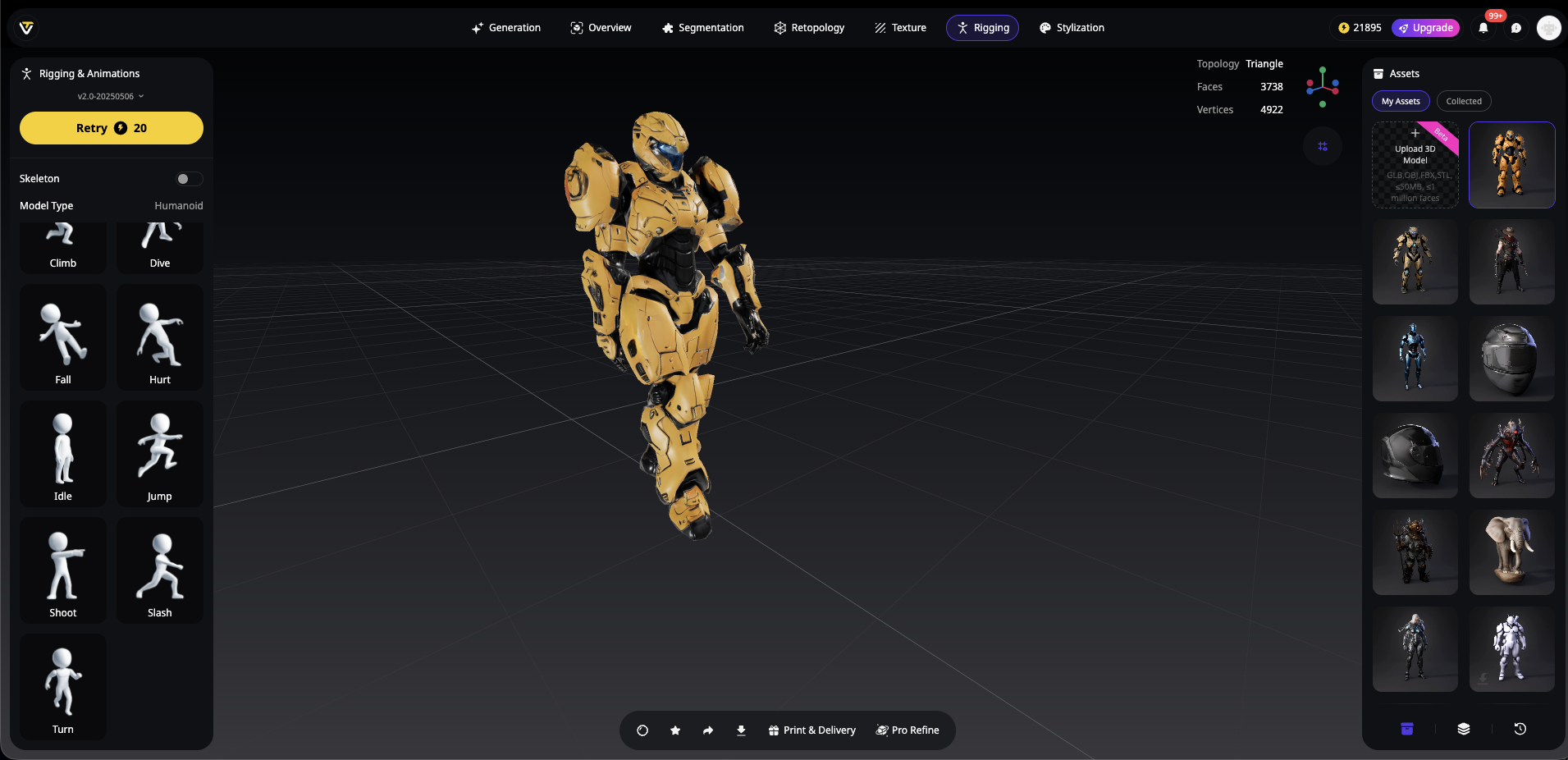

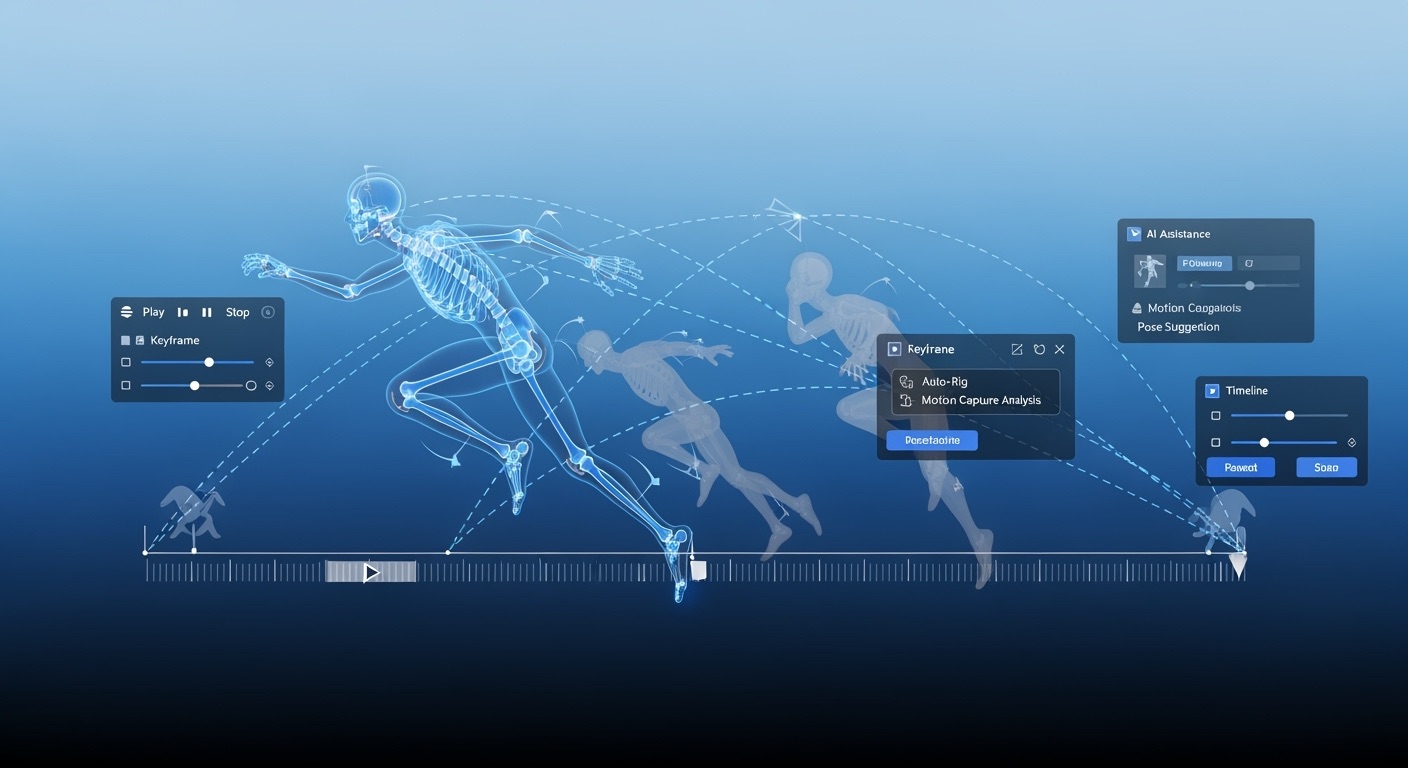

AI-Powered Rigging & Animation (Generative 4D)

Static models are yesterday's news. Generative 4D represents the next frontier—AI that understands how objects should move through time.

What changed: 2025's breakthrough isn't just about generating rigs. It's about AI understanding anatomy, physics, and motion principles. These systems create animation-ready skeletons that actually deform correctly.

Why it matters: Character rigging traditionally requires deep technical knowledge and hours of manual work. Now, AI analyzes your model's structure and creates professional-grade rigs automatically.

Tripo AI leads here with automatic T-pose generation and skeleton rigging. Their system recognizes humanoid forms, places joints correctly, and exports directly to Mixamo or game engines. I tested this with a complex character model—what would've taken me 4 hours took 30 seconds.

Key advances:

- Automatic weight painting that actually works

- Joint placement based on anatomical understanding

- Export-ready for major animation platforms

- Support for non-humanoid creatures
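For context on what weight painting has to get right: in standard linear blend skinning, each vertex carries per-joint weights that should sum to 1, and real-time engines commonly cap how many joints can influence one vertex. The sketch below is generic and is not Tripo AI's method. The function name, the joint names, and the cap of four influences are all illustrative assumptions.

```python
def normalize_skin_weights(weights, max_influences=4):
    """Keep the strongest joint influences and rescale them to sum to 1.

    `weights` maps joint name -> raw weight for a single vertex.
    """
    # Keep only the largest positive weights, up to the cap.
    strongest = sorted(
        ((joint, w) for joint, w in weights.items() if w > 0),
        key=lambda item: item[1],
        reverse=True,
    )[:max_influences]

    total = sum(w for _, w in strongest)
    if total == 0:
        raise ValueError("vertex has no positive joint weights")

    return {joint: w / total for joint, w in strongest}


# Hypothetical raw weights for one vertex near the elbow.
raw = {"upper_arm": 0.6, "forearm": 0.3, "shoulder": 0.3, "spine": 0.05, "hand": 0.01}
clean = normalize_skin_weights(raw)
print(clean)
```

Here the weakest influence (`hand`) is dropped and the remaining four are rescaled so the vertex deforms predictably.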

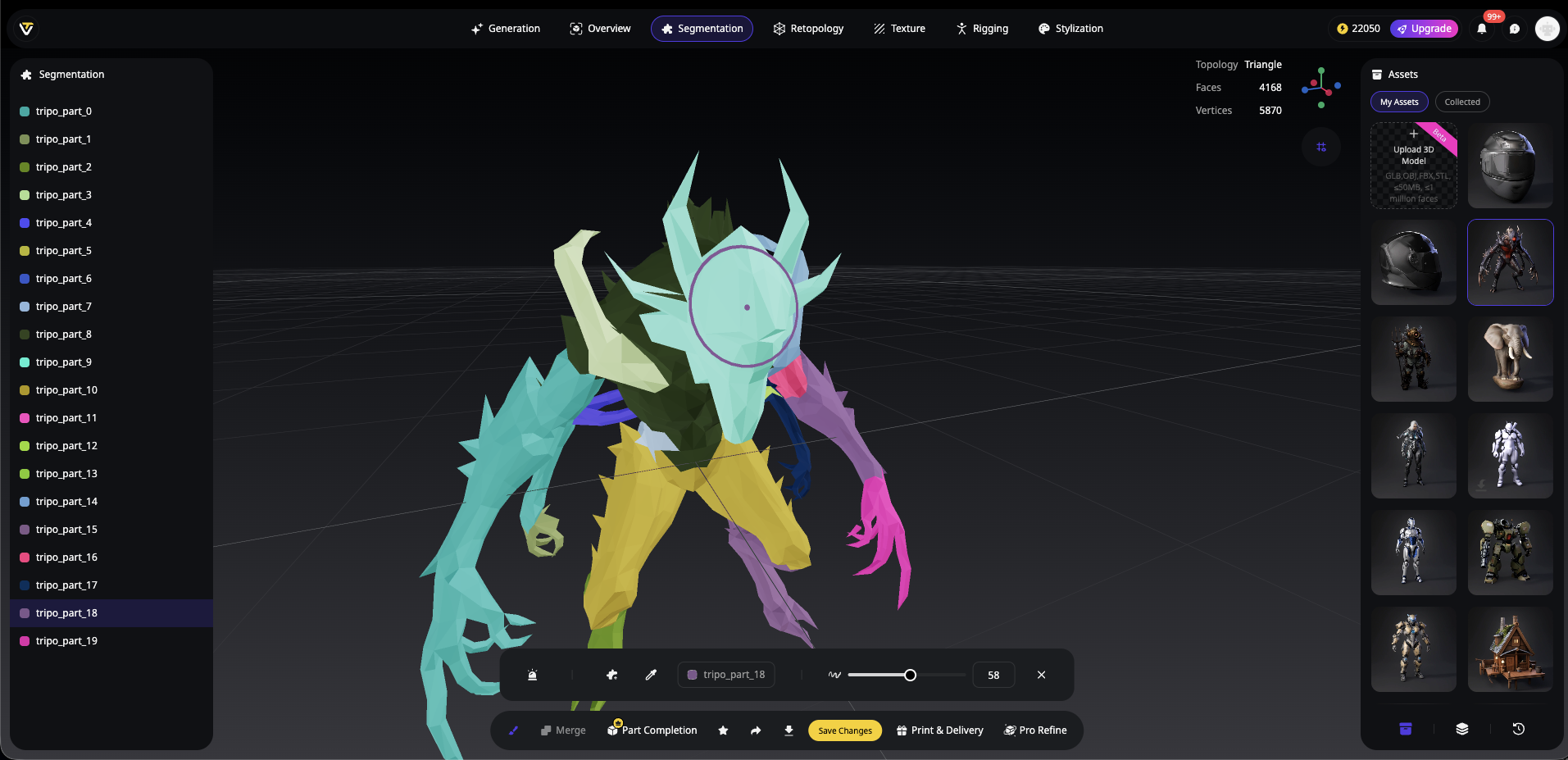

Context-Aware Generation & Editing

Here's where things get really interesting. Inspired by image-side breakthroughs like Flux Kontext, AI 3D model generators now understand context.

What changed: Instead of regenerating entire models for small changes, AI now comprehends existing geometry and adds to it intelligently. Want to add armor to a character? The AI understands the body shape and creates fitting pieces.

Why it matters: This transforms AI from a one-shot generator into an iterative design partner. You're not starting over with each change—you're building on what works.

Tripo AI's Smart Part Segmentation exemplifies this approach. The system identifies distinct parts of your model (head, torso, accessories) and lets you modify them independently. Their integration with Flux and GPT-4o takes this further, understanding complex instructions like "make the armor more battle-worn but keep the underlying design."

Practical applications:

- Brand consistency across model variations

- Non-destructive editing workflows

- Style transfer between different models

- Intelligent scene population

Automated Retopology

This might be the most underappreciated breakthrough of 2025. AI retopology finally solved one of 3D's most tedious bottlenecks.

What changed: AI now understands edge flow, deformation requirements, and polygon budgets. It converts high-poly sculpts or messy scans into clean, game-ready topology automatically.

Why it matters: Manual retopology is mind-numbing work that can take days for complex models. Artists hate it. Studios budget heavily for it. AI just eliminated the problem.

Tripo Studio's 2025 retopology update delivers animation-aware topology in seconds. Their Smart Low Poly mode analyzes how a model will deform and places edge loops accordingly. I threw a 2-million poly sculpt at it—got back a clean 8K poly model ready for rigging.
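To put that reduction in numbers, here's a quick calculation. It reads "8K" as 8,000 polygons, which is an assumption on my part, and the helper name is just illustrative.

```python
def reduction_stats(source_polys, target_polys):
    """Return (reduction factor, percent of polygons removed)."""
    if source_polys <= 0 or target_polys <= 0:
        raise ValueError("polygon counts must be positive")
    factor = source_polys / target_polys
    percent_removed = (1 - target_polys / source_polys) * 100
    return factor, percent_removed


factor, percent_removed = reduction_stats(2_000_000, 8_000)
print(f"{factor:.0f}x fewer polygons, {percent_removed:.1f}% removed")
# → 250x fewer polygons, 99.6% removed
```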

Real-Time Photogrammetry (NeRFs & Gaussian Splatting)

The biggest surprise of 2025? Real-time Gaussian splatting went from research paper to production tool.

What changed: 3D Gaussian Splatting (3DGS) and Neural Radiance Fields (NeRFs) matured into practical tools. Real-world capture became as easy as shooting video, with instant photorealistic results.

Why it matters: This breakthrough bridges the gap between reality capture and usable 3D assets. Film sets, architecture firms, and game studios can now create perfect digital twins of real spaces in minutes.

The Gaussian Splat standardization town hall at SIGGRAPH 2025 highlighted how quickly this technology is maturing. NVIDIA's integration into their simulation APIs signals mainstream adoption is imminent.

Where Tripo AI fits: While Gaussian splatting excels at capturing reality, you still need clean meshes for many applications. Tripo AI complements these workflows by converting splat data into game-ready assets with proper topology and textures.

Current capabilities:

- Real-time capture and rendering

- Photorealistic quality

- Growing tool ecosystem

- Hybrid workflows with traditional meshes

What This Means for Creators

These five breakthroughs aren't isolated improvements—they're interconnected advances that fundamentally change how we create 3D content.

For indie developers: You can now build AAA-quality assets without a massive team. Image-to-3D workflows let you concept and create in the same sitting.

For studios: Production pipelines compress from months to weeks. The question isn't "can we afford to make this?" but "how many variations do we want?"

For newcomers: The barrier to entry has essentially disappeared. If you can describe what you want, you can create it.

The Road Ahead

As I write this in late 2025, we're still processing how fast things changed. AI 3D model generators went from curiosity to critical infrastructure in less than a year.

What excites me most? We're just getting started. The convergence of these five breakthroughs creates compound effects we're only beginning to explore. When AI texturing meets context-aware editing meets automated retopology, entirely new workflows emerge.

Tripo AI sits at the center of this revolution, with over 2 million users generating 20+ million models. Their integrated approach—combining generation, texturing, rigging, and retopology in one platform—shows where the industry is heading.

Frequently Asked Questions

What is the biggest challenge still facing AI 3D generation?

Even with these breakthroughs, perfect topology for complex articulated objects remains challenging. AI excels at common forms but struggles with unique mechanical designs or extreme deformations. Controllability at scale and real-time efficiency for massive scenes also need work.

Will AI 3D generation replace the need for 3D artists?

Current evidence shows AI acts as a powerful co-pilot rather than a replacement. It accelerates ideation, texturing, rigging, and retopology, but expert direction remains critical for style decisions, quality control, and meeting specific production constraints. Artists who embrace these tools multiply their creative output.

How will AI 3D impact the metaverse or VR/AR?

As Gaussian splatting and related pipelines mature, creating photorealistic virtual worlds becomes exponentially faster. We're seeing denser, more detailed environments with better performance. The combination of AI 3D model generators and real-time rendering tech will make truly immersive digital spaces commonplace.

Your Turn to Create

2025 proved that AI 3D isn't just about making things faster—it's about making the impossible possible. These five breakthroughs solved problems that plagued 3D artists for decades.

Whether you're texturing with Magic Brush, generating characters with automatic rigging, or exploring the latest in image-to-3D conversion, the tools are ready. The question isn't whether AI can help your 3D workflow. It's how much time you'll save when you start using it.

Ready to experience these breakthroughs firsthand? Join 2+ million creators already using Tripo AI. See why 2025 became the year 3D creation changed forever.
